Contribution Courts

A participatory approach to understanding contribution to results.

What is this all about?

‘Contribution Analysis’ is unlikely to be a concept that makes you sit up in your seat with excitement (though I really hope it does).

Instead it may feel like a dark art that happens over there somewhere by someone sitting in a tower, abstracted away from your work. Something hard to understand, that happens at your programme rather than with it.

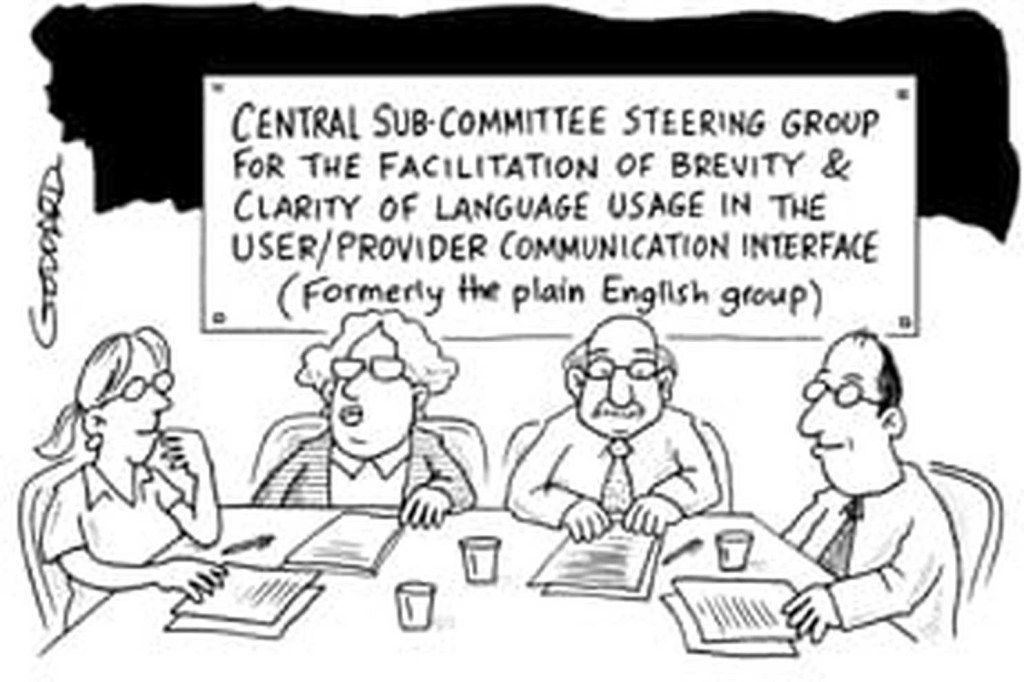

Well, that perception is entirely valid and justified, as historically this is often what happens. Quite frequently, someone considered somewhat nerdy sits with documents and a blend of evidence and figures out contribution to a result (yours or otherwise). They then come to some kind of decision about your programme and inform you of their judgement. As such, Contribution Analysis has become yet another aspect of Monitoring, Evaluation, and Learning (MEL) that feels inaccessible, sometimes threatening, and shrouded in jargon.

This is genuinely a shame, as development interventions in this era tend to reside in the domain of the complex: the end result isn’t entirely clear, and the pathway is highly complex. Further to this, we are now operating in a global context: each programme is trying to inject expertise and change in what are often crowded implementation spaces. The combination of these means that it is crucial to understand whether and how your work contributed to an observed success or failure. It is important not just in feeding the LogFrame or Results Framework beast, but also for learning.

Understanding how your intervention contributed to something matters for programmatic learning: we can see what was successful and why, so that we can replicate it elsewhere. It is just as important in times of failure, as it means we can understand how something didn’t contribute and why, so that we can try something different next time (or re-target efforts if a more successful programme is operating where you are). If you are collaborating with another actor, it helps you understand why that collaboration was important, and to what degree your work was pivotal to success. It helps you show why the whole is greater than the sum of the parts. Understanding the nuances of what connects what you’re doing to an observed success is therefore not a punitive or success-scoring thing: it’s a learning thing.[1]

To get away from the jargon and tangle, and to do this in a way that is participatory, I have been playing with a method called Contribution Courts. (Mark Oldenbeauving and, I believe, Tom Aston have been doing a lot of work with these, so please check out their work. Contribution Courts are not mine, but I enjoy the approach!)

[1] For more details on complexity please see my complexity section.

What are Contribution Courts?

Contribution Courts are a participatory and interactive approach to understanding the degree to which a programme contributed to an evidenced result or change. This allows us to move away from traditional, more isolated and less engaging, methods.

Contribution Courts help us draw on an intuitive understanding of how we examine evidence and come to a conclusion: we are all familiar with what a court is, and how evidence is collected and fed into it.

At these ‘courts’, programme teams (in this case a member of the delivery team, a team leader, or similar) will present a Story of Change, case study, or result to a ‘jury’, making their case for how the programme contributed to a particular result, supported by evidence.[1] A monitoring and evaluation (M&E) adviser could act as a ‘prosecutor’, gently questioning contribution and challenging the programme team to further support their claim.[2] The ‘jury’ observe, then give a verdict on the degree of contribution of which they have been persuaded.

[1] For example: let’s say you are delivering a programme funded by the FCDO in the climate resilience space. The ‘jury’ could be comprised of the FCDO SRO, a programme representative or two, and a critical friend/Troll facilitator who is familiar with the programme.

[2] For example: if you have staff from your organisation working on MEL for the programme, the ‘prosecutor’ could be someone from the broader MEL team who is not associated with the programme and so has some distance from it. This permits better cross-examination, as they are separate from the programme and are better placed to ask objective questions. It is also fine if it is a MEL member from the programme team, as they have the benefit of knowing the evidence intimately. It ultimately comes down to what is important to you.

Why would I ever do this?

While it may feel like a gimmick, this approach has several benefits that I think are really powerful.

Participatory processes like this yield more learning for contribution analysis than other, more isolated, methods: by inviting those who have seen the changes first-hand into the process, you get the benefit of collaboratively investigating a claim and rounding it out. You get to discuss information and ask questions in a manner you wouldn’t otherwise.

Aside from being participatory, by using the model of a court (something we all understand), this approach helps teams climb inside the contribution analysis process and get a better grasp of why it is important as a concept. It helps connect a very abstract methodology to something that is easier to understand. Hopefully it also helps teams better understand why evidence and data collection are important to making claims about success, and gain a sense of ownership of this process (‘MELnership’?).

Conclusions are determined together, reducing the feeling of evaluation happening at, not with, your team, and acting as a collective learning exercise for both successful and unsuccessful cases (i.e. we all understand and agree why something went well or badly).

It permits vertical learning and speaking truth to power, as the client or SRO can be included in the process as part of the jury: you get the opportunity for them to really understand the nuances of your stories, and be part of the process that determines success or failure.

How do I do this?

As stated, Contribution Courts are relatively straightforward. Simply identify a case study or story of change that requires a contribution assessment, and set up the ‘court’ according to the team structure. Find a results owner (it really needs to be someone close to the result and who saw it unfold) to present the narrative and identify their evidence, then source a ‘prosecutor’, such as an appropriate member of your MEL team. Finally, assemble your jury and select a date to engage in the process. Ensure someone is present to write up what occurs and formally record the collective contribution decision for your results framework, learning plans, or any other methods you’re using.

The event can be more or less formal depending on the characters involved and your relationships. Personally, with programme teams I know well, I always keep these things more informal. Make sure you have a scribe and that the process is adhered to, but encourage folk to wear comfortable clothes and have snacks set out to keep energy levels up. Just because it’s called a court doesn’t mean it needs to instil the same level of fear. Having a result poked and prodded is intimidating enough, so setting a gentle tone is crucial to success and to ensuring everyone feels comfortable.

Another thing to consider is how judgements are made. Contribution can be incredibly subjective if not structured. Personally, I think a good way of helping the jury is to encourage them to use something like Tom Aston’s contribution rubrics. These can be printed out on paper and handed to them, and guide their collective decision-making on strength of contribution. Rubrics are intended to help structure difficult judgements, so the two naturally fit together in my mind, and Tom’s are really intuitive.

It is really important to have a practice run. Courts are intuitive (we have all seen Boston Legal or something similar), but that doesn’t mean things go smoothly the first time. It is best to do a dummy run at the start of the day with something inconsequential. Invite the results owner to present a story of how they came to be in that room today (or something similar, like cooking a meal). The cross-examiner can then probe that (‘I see, so it was the combination of taking the tube and walking that got you to this building, but you spent the most time on the tube. Without it, could you still have got here?’ etc.). The jury can then practise using the rubric in response. This is a light-hearted way to break the ice, and it also means any confusion or misunderstandings are identified early.

For more resources, please seek out work done by those I mentioned earlier: Mark Oldenbeauving and Tom Aston. I have also included a little illustration of an example process for how you might set these up.